
Where to Start?

We began our journey into Intelligent Document Processing with a large healthcare contract eight years ago. The challenge was to extract doctors’ instructions and clinical data from long and messy letters. This was before powerful machine learning algorithms were practically available (very different from today, where there is too much choice, or noise, in the market). Since then, we have helped customers adopt powerful solutions, leading to dramatic FTE savings, quicker processing and access to ‘new’ data at scale. This includes classifying incoming documents (often PDFs) and extracting data on investment portfolios, quotes from insurance brokers, trade confirmations, etc.

The technology is developing rapidly and the marketplace can seem confusing. In this blog I attempt to answer the question of ‘if we were starting today, where would we begin?’

Quick recap on terminology related to Intelligent Document Processing (IDP)

When thinking about automating document processing, you’ll need to consider classification and extraction. They can add value to processes independently or jointly.

Classification: Classification typically refers to the differentiation of documents based on their content. Some classification can be done with simple manually created rules (‘look for x in the title’) but with increasing complexity and documents/formatting changing over time, machine learning is the only effective approach. Our customers use classification models (=algorithms) to sort a wide variety of incoming docs, including quarterly portfolio reports, equity/debt offer documents, deal term sheets, monthly performance reports, etc. Once you know what type of document you have, it can more easily be (automatically) processed by the right team or be sent to the next domain specific algorithm.
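To make the ‘look for x in the title’ idea concrete, here is a minimal sketch of a rule-based classifier. The document types and patterns are hypothetical and purely illustrative; it also shows why this approach gets brittle, as every new title variation needs another hand-written rule.

```python
import re

# Hypothetical rules: document type -> patterns to look for in the title.
RULES = {
    "quarterly_portfolio_report": [r"\bquarterly\b.*\bportfolio\b"],
    "term_sheet": [r"\bterm sheet\b"],
    "monthly_performance_report": [r"\bmonthly\b.*\bperformance\b"],
}


def classify_by_title(title: str) -> str:
    """Return the first document type whose pattern matches the title."""
    lowered = title.lower()
    for doc_type, patterns in RULES.items():
        if any(re.search(pattern, lowered) for pattern in patterns):
            return doc_type
    # Anything unmatched is a candidate for a trained model or manual review.
    return "unknown"


if __name__ == "__main__":
    print(classify_by_title("Q3 Quarterly Portfolio Report"))
    print(classify_by_title("Deal Term Sheet - Project X"))
```

Documents that fall through to `unknown` are exactly where a trained classification model earns its keep.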

Extraction: Extraction refers to taking specific data out of an object (image, PDF text, Word doc, etc.) and putting it into a structured format. Building on the examples above, this could involve taking key terms from contracts, performance data from PDF reports, key trade dates and conditions, etc. Extraction models often work better when designed specifically for a certain class of document. As with people, it’s about the right algorithm for the right job.

Where to start…find a use case and decipher a muddled landscape

We are not going to discuss use case selection in this blog, other than to make the observation that you need to find and rank use cases based on business benefit and complexity. Start with those in the quadrant of high benefit and low/moderate complexity!

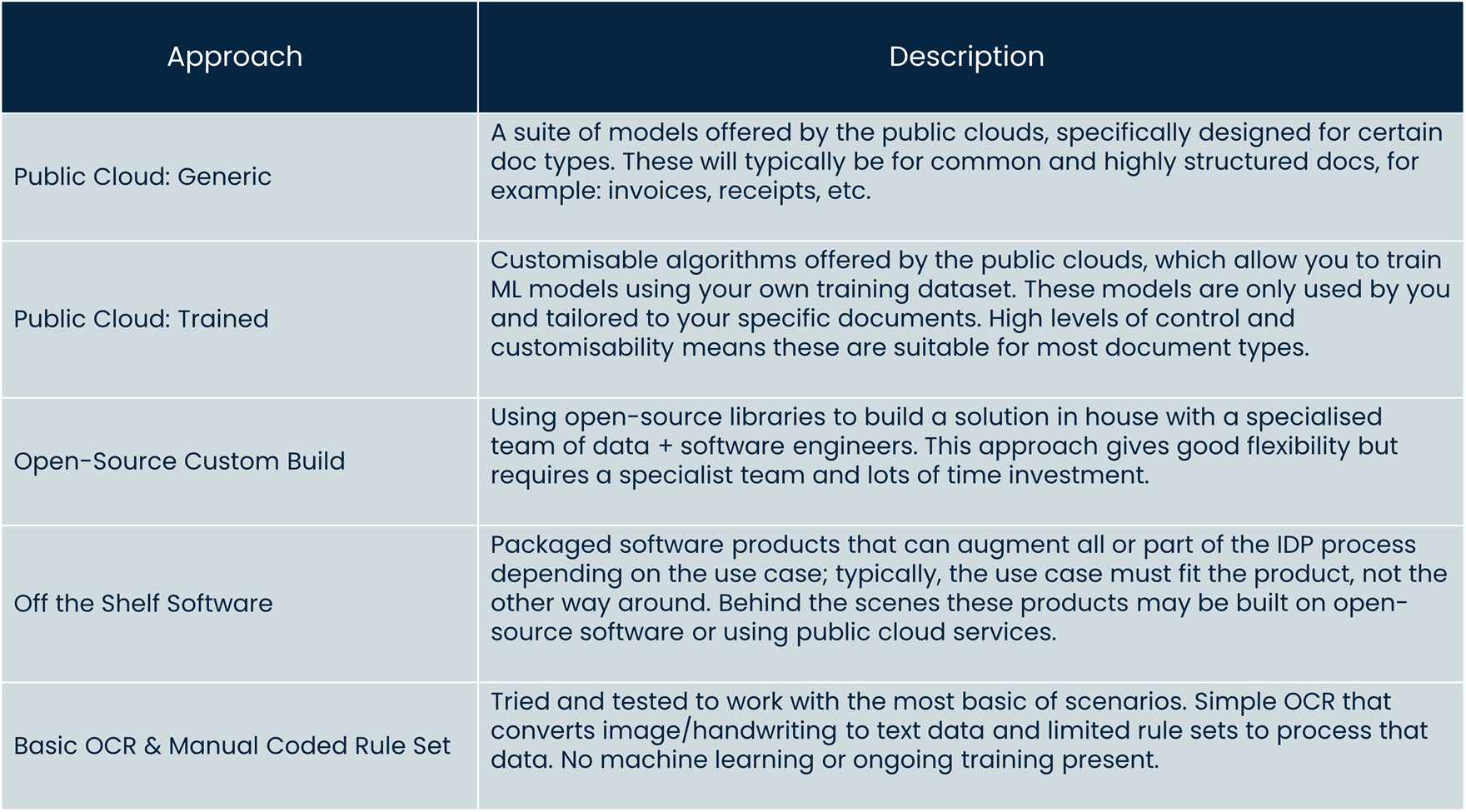

In terms of technology options, the first step is to get an understanding at a high level by completing some research. You’ll be met with a sea of options, in differing shapes and forms. Our view is that it’s usually sensible to start with an evaluation of the big public cloud offerings, while keeping in mind the alternative options.

Public cloud offers one of the quickest implementation times with low costs to prove a concept; you are also leveraging a huge amount of investment which few organisations can dream of matching. The ‘generic’ public cloud models are tried and tested and while useful for the docs they support, they offer quite a narrow set of use cases. Training your own model on public cloud is, in my opinion, clearly the best place to start.

Getting Going

When talking about public cloud you will likely know the big players. Between Google (GCP), Amazon (AWS) and Microsoft (Azure) there are a whole host of services on offer. We are primarily interested in the ‘Public Cloud: Trained’ model services: the custom models that we train with our customers’ datasets and that are proprietary to them. These all use natural language processing (NLP) as the underlying approach and, depending on the use case, we use the following solutions for this:

- Microsoft: Form Recognizer

- Google: AutoML (transitioning to Vertex AI)

- Amazon: Amazon Comprehend

The choice of which to use will often come down to the current technology landscape you have/are adopting. We generally find that all are comparable in terms of accuracy.

For the discussion below, we have chosen Google, as we have found it relatively simple to work with and have been impressed by the results. It’s worth noting that you don’t have to stick with one provider, of course: all of these services are designed to be used as microservices and can be integrated with (almost) any other solution.

The documents we’ve chosen are Quarterly Performance Reports, sourced from PDFs attached to emails.

Training The Models

Our training approach is to be as automated as possible. If you have the historic data, in the correct structure, then building a utility that will create the training set (in this case JSONL file(s)) from that data is of course preferable. The alternative would be to manually label the documents, which depending on the size of the training set and complexity of documents may not be appropriate. Our experience tells us that you will need at least 100 labelled docs for your model.
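As a rough sketch of such a utility, the snippet below turns historic records (document text plus the values that were keyed in by hand) into JSONL lines with character offsets for each label. The field names (`text`, `annotations`, `label`, `start`, `end`) and the sample record are assumptions for illustration only; each cloud provider defines its own import schema, so check the relevant documentation before adapting this.

```python
import json


def find_span(text, value):
    """Locate the first occurrence of a known value; return (start, end) or None."""
    start = text.find(value)
    if start == -1:
        return None
    return start, start + len(value)


def build_example(text, known_fields):
    """Build one training example from document text and historic field values."""
    annotations = []
    for label, value in known_fields.items():
        span = find_span(text, value)
        if span is None:
            # The value was not found verbatim; leave it for manual labelling.
            continue
        annotations.append({"label": label, "start": span[0], "end": span[1]})
    return {"text": text, "annotations": annotations}


def to_jsonl(examples):
    """Serialise examples as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(example) for example in examples)


if __name__ == "__main__":
    # Made-up record standing in for historic data.
    text = "Fund ABC returned 4.2% in Q3 2021."
    fields = {"fund_name": "Fund ABC", "quarterly_return": "4.2%"}
    print(to_jsonl([build_example(text, fields)]))
```

Exact string matching is the simplest possible way to locate a value. Real documents usually need some normalisation (dates, number formats, whitespace) before historic values can be matched reliably.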

A word on retraining and Human in the Loop

Retraining/iteratively training models is a key part of improving the algorithm over time. The two approaches here are to manually retrain periodically, vs ongoing human in the loop training.

Periodic, manual training is simply reviewing results and manually labelling up new items to add to the training set on a set schedule, say quarterly.

By human in the loop, I am referring to building continuous training into the business process. Staff are already being paid to correct, validate & input data, so why not feed that work back into the ML training set and build a highly accurate model over time, almost for free?

Evaluating & Running the Models

The evaluation of these models is inherently part of the training, along with the validation and creation itself.

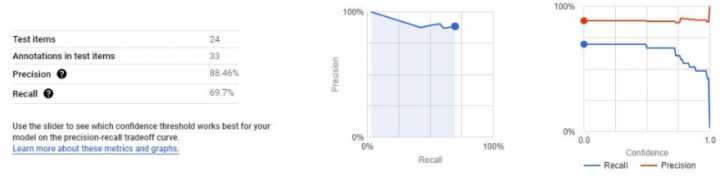

We created the model below by providing a training set and an evaluation set (of labels, or docs in layman’s terms). The evaluation set is used during training to find the best-performing neural network fitted to the training set. Once the model is trained, it is run against a test set and the results are generated.

Our New ‘First’ Model

Reading the Results

When analysing models, the two key metrics to consider are precision and recall, so what do they mean?

Precision: This measures how many of the model’s positive predictions were correct. It is the number of true positives as a percentage of all positive predictions (true positives plus false positives).

So a model with 80% precision would mean that 80% of the positive predictions were correct:

100 items were predicted to be positive, however 20 of them should have been negative.

Recall: Recall is the flip side of precision. It measures how many of the actual positives the model found: the number of true positives as a percentage of all actual positives (true positives plus false negatives, that is, the correct values the model missed).

So a model with 80% recall would mean that 80% of the actual positives were found:

100 labels should have been positive, however 20 of them were predicted to be negative.

It’s clear that both of the above are important to consider, and whether false positives or false negatives are more acceptable will depend on the use case in question.
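For readers who prefer to see the arithmetic, here is a small sketch using the standard definitions: precision is true positives over all positive predictions, recall is true positives over all actual positives, and F1 is the harmonic mean of the two. The counts in the usage example are made up for illustration.

```python
def precision(true_pos: int, false_pos: int) -> float:
    """Share of positive predictions that were correct."""
    predicted_pos = true_pos + false_pos
    return true_pos / predicted_pos if predicted_pos else 0.0


def recall(true_pos: int, false_neg: int) -> float:
    """Share of actual positives that the model found."""
    actual_pos = true_pos + false_neg
    return true_pos / actual_pos if actual_pos else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


if __name__ == "__main__":
    # Illustrative counts: 80 correct positives, 20 wrong positives, 20 missed.
    p = precision(true_pos=80, false_pos=20)
    r = recall(true_pos=80, false_neg=20)
    print(f"precision={p:.2f} recall={r:.2f} f1={f1_score(p, r):.2f}")
```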

Use case for high precision: spam emails

A classic example would be to look at spam emails. Let’s say a model wants to find and delete spam. In this case a false negative would result in a spam email not being deleted, whereas a false positive could delete an important email. Therefore, high precision is more important.

Use case for high recall: identifying chronic health conditions

Now consider a model that attempts to flag chronic health conditions as urgent. Here, a false positive would mean we incorrectly flag a non-serious condition as chronic, which is not too bad – a slight admin overhead. A false negative would mean missing an actual chronic condition. This would therefore be a model that should heavily prioritise recall.

With that in mind, you can see how our model evolved as we added more data to the training set. Recall and precision trade-offs could be made by changing our required confidence threshold. Higher confidence requirements naturally lower recall but increase precision.
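The threshold trade-off can be illustrated with a short sketch. The predictions below are invented (confidence score, whether the item was actually positive), and treating precision as 1.0 when nothing is accepted is a convention chosen here, not a rule.

```python
def precision_recall_at(predictions, threshold):
    """Compute (precision, recall) when only predictions at or above
    the confidence threshold are accepted as positive.

    predictions: list of (confidence, is_actually_positive) pairs.
    """
    tp = sum(1 for conf, pos in predictions if conf >= threshold and pos)
    fp = sum(1 for conf, pos in predictions if conf >= threshold and not pos)
    fn = sum(1 for conf, pos in predictions if conf < threshold and pos)
    # Convention (an assumption): with no accepted predictions, precision is 1.0.
    p = tp / (tp + fp) if (tp + fp) else 1.0
    r = tp / (tp + fn) if (tp + fn) else 0.0
    return p, r


if __name__ == "__main__":
    # Made-up model outputs for illustration.
    sample = [
        (0.95, True), (0.90, True), (0.80, False),
        (0.70, True), (0.60, False), (0.40, True),
    ]
    for threshold in (0.5, 0.85):
        p, r = precision_recall_at(sample, threshold)
        print(f"threshold={threshold}: precision={p:.2f} recall={r:.2f}")
```

On this sample, raising the threshold removes the false positives but also drops some true positives, which is the trade-off described above.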

The key point to make with this model is the speed to get to something with a perfectly acceptable level of ‘accuracy’ (F1 if we talk data science). The reality is that within weeks (not months or years) something like this can easily be achieved with the right know-how.

Key Insights

The Top 3 Success Criteria

1. Making the right technical decision: there is a lot of noise in this space, and frankly a lot that won’t work. Choosing the right approach is a big part of being successful.

a. Public cloud is fast, accurate and very cost effective for small/medium scale projects. We believe it’s the best way to get going on your journey.

b. Other tech approaches exist, if public cloud doesn’t fit your strategy. Explore these options, but be aware they come with higher risk and likely effort/cost.

c. Early engagement with your Security & Data Privacy colleagues to understand their requirements is crucial. This might take you down a particular path and save you significant evaluation / R&D time.

d. If your requirements are highly complex, consider building multiple models each tailored to solve a specific part of the extraction/transform, and don’t rule out augmenting with traditional ETL to get the final structure/result right.

2. ‘Safety’ of the solution. When do you take the stabilisers off? Possibly never, depending on your precision/recall requirements. Is 95% enough for your business use case, accepting that 5% will be ‘lost’ (bear in mind humans are not perfect)? Or do you need sophisticated monitoring and testing of outputs to catch errors as you move towards 99.9%?

a. Ensure you’ve considered ‘human in the loop’ in your model implementations and be aware of what your end goal is.

3. Operationalising the whole thing. You will have heard the term MLOps, referring to the DevOps of the ML world. Embedding an effective MLOps solution into the real/ongoing business process is essential to keep your models competitive (i.e. don’t let the BlackRocks of this world leave you in the dust with their ever-advancing models).

a. The strongest possible model will have the most recent and accurate data in its training set.

b. Your models will likely degrade over time if not maintained. This is largely due to something called data drift: essentially, your model inputs slowly change over time, causing the old data that the model was built on to become less relevant.
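One simple way to keep an eye on this is to compare the model’s average confidence on recent documents with a baseline captured at go-live. The sketch below is only one possible proxy for drift; the function names, the sample scores and the 0.05 tolerance are assumptions for illustration, not recommended values.

```python
from statistics import mean


def confidence_drop(baseline_scores, recent_scores):
    """Difference between average baseline and average recent confidence."""
    return mean(baseline_scores) - mean(recent_scores)


def needs_review(baseline_scores, recent_scores, max_drop=0.05):
    """Flag the model for review or retraining if confidence has fallen
    by more than the tolerated amount (max_drop is an assumed value)."""
    return confidence_drop(baseline_scores, recent_scores) > max_drop


if __name__ == "__main__":
    # Made-up confidence scores for illustration.
    baseline = [0.95, 0.93, 0.96]
    recent = [0.80, 0.78, 0.85]
    print("review needed:", needs_review(baseline, recent))
```

A check like this can run on a schedule as part of the MLOps pipeline, with a flagged result triggering a manual review or a retraining cycle.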
